LAI #96: From Building LLMs by Hand to Smarter Agent Patterns

Explainable AI, LangGraph design patterns, the rise of small language models, and AWS-powered multi-agent systems.

Good morning, AI enthusiasts!

AI isn’t just about bigger models; it’s about building smarter, more trustworthy systems. This week, we start with the fundamentals: a step-by-step guide to creating an LLM from scratch in PyTorch, showing how every piece of the architecture fits together. From there, we look at what it takes to make AI explainable and human-centric, and why this is no longer optional in high-stakes domains.

The issue also explores practical design choices: applying agentic patterns in LangGraph, using small language models where they outperform larger ones, and scaling multi-agent applications with AWS services. It’s a week that balances low-level engineering with big-picture system design.

Let’s get into it!

What’s AI Weekly

Over the last week, I have shared a few ‘AI in a minute’ videos covering all the major developments in AI, such as AgentKit, Sora 2, Tinker, Claude Sonnet 4.5, and a lot more. I share my thoughts on why these matter and how they will impact your interactions with AI and LLMs. Find all the videos here!

— Louis-François Bouchard, Towards AI Co-founder & Head of Community

Learn AI Together Community Section!

Featured Community Post from Discord

Xecho1337 has built Brain4J, a new machine learning framework for Java. It’s lightweight and designed for speed, with custom parallel operations and a GPU-ready design. He is also planning future updates that will add Transformers, LSTMs, RNNs, Liquid Neural Networks (LNNs), and better GPU acceleration. Check it out on GitHub and support a fellow community member. If you have any suggestions or questions, connect with him in the thread!

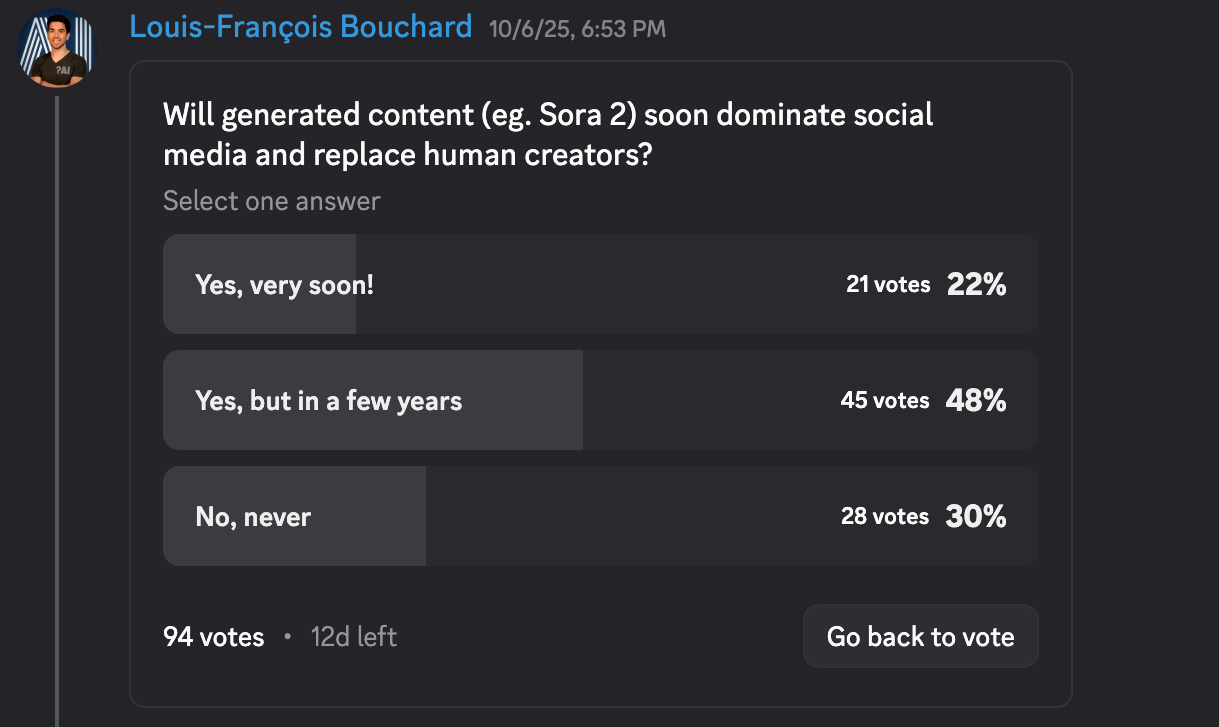

AI poll of the week!

The room leans “yes, just not yet.” Most expect AI to overrun the feed-filler content first (ads, b-roll, meme reels), while human creators maintain an edge where voice, trust, and live presence matter.

Name the first format you’d hand mostly to AI and the real-world trigger that would make you do it (e.g., indistinguishable quality on a second watch, overnight turnaround at the same budget, platform distribution boosts, or credible trust signals like watermarks/disclosure). Let’s discuss in the thread!

Collaboration Opportunities

The Learn AI Together Discord community is flooded with collaboration opportunities. If you are excited to dive into applied AI, want a study partner, or even want to find a partner for your passion project, join the collaboration channel! Keep an eye on this section, too — we share cool opportunities every week!

1. Andreas532707 is looking for a partner to build an AI project. If you are a beginner starting your first project, connect with him in the thread!

2. Tigerlily6686 is working on a discovery platform and needs help choosing the best no-code or low-code tools that can handle sign-on for both sides, and is also looking for someone to help with coding. If you want to join the project and help, reach out to him in the thread!

Meme of the week!

Meme shared by atvenomoth

TAI Curated section

Article of the week

No Libraries, No Shortcuts: LLM from Scratch with PyTorch by Ashish Abraham

This walkthrough details the process of creating a custom Transformer-based LLM using only PyTorch. It systematically breaks down the decoder-only architecture, explaining and implementing key components like self-attention, positional encoding, and multi-head attention. The process covers the complete model lifecycle, from pretraining on a general dataset to understand language, to fine-tuning on a specialized corpus to adopt a specific style. It also covers practical aspects of training, such as loss calculation, optimizers, and learning rate scheduling (with code examples).
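To make the self-attention step concrete, here is a minimal sketch of causal scaled dot-product attention for a single head. It is written in plain Python rather than PyTorch so it runs anywhere, and it is not the article's code: the function names `softmax` and `causal_attention` are illustrative assumptions, and a real implementation would use tensors and learned query, key, and value projections.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(q, k, v):
    """Single-head attention where position t only sees positions 0..t.

    q, k, v are lists of T vectors (lists of floats).
    Returns (outputs, weights); weights is a T x T matrix padded with zeros
    for the masked (future) positions.
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for t, q_t in enumerate(q):
        # Scaled dot products against the visible keys only (the causal mask).
        scores = [scale * sum(a * b for a, b in zip(q_t, k[s])) for s in range(t + 1)]
        w = softmax(scores)
        # Weighted sum of the visible value vectors.
        out = [sum(w[s] * v[s][j] for s in range(t + 1)) for j in range(len(v[0]))]
        outputs.append(out)
        weights.append(w + [0.0] * (len(q) - t - 1))
    return outputs, weights

# Toy input: three token vectors used directly as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(tokens, tokens, tokens)
```

In a decoder-only model, the mask is what lets the network be trained to predict the next token without seeing it.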

Our must-read articles

1. Explainable, Responsible & Human-Centric AI: The Complete Senior Developer’s Guide by Rohan Mistry

As AI systems become increasingly complex, this guide outlines the shift in engineering toward explainable, human-centric models. It moves beyond a sole focus on accuracy to address the “black box” problem in high-stakes fields like healthcare and finance. It covers technical foundations such as SHAP and LIME, principles for fair and transparent design, and the production challenges of implementing these systems at scale, including performance bottlenecks and model drift. It also reviews the increasing regulatory pressures, positioning auditable and trustworthy AI as a core responsibility for developers in the current landscape.
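SHAP attributes a prediction to its input features using Shapley values. The sketch below computes exact Shapley values by brute force for a tiny model, replacing "missing" features with a baseline value. It is an illustration of the underlying idea only, not the `shap` library's API or the guide's code; the names `shapley_values` and `toy_model` are hypothetical, and the exhaustive enumeration is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values for one input x, relative to a baseline input."""
    n = len(x)

    def value(subset):
        # Features in the subset take their real value; the rest use the baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Standard Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for s in combinations(others, size):
                coalition = set(s)
                phi += weight * (value(coalition | {i}) - value(coalition))
        phis.append(phi)
    return phis

def toy_model(z):
    """Hypothetical linear scoring model used only for this example."""
    return 2 * z[0] + 3 * z[1] - z[2]

attributions = shapley_values(toy_model, [1, 2, 3], [0, 0, 0])
```

A useful sanity check is that the attributions sum to the difference between the model's output on the input and on the baseline.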

2. Agentic Design Patterns with LangGraph by Hamza Boulahia

This article demonstrates how to build more robust and scalable systems using LangGraph by implementing several agentic design patterns. It outlines methods for sequential tasks with Prompt Chaining, conditional logic with Routing, and concurrent processing through Parallelization. It also explains how to enable self-correction with Reflection, integrate external APIs via Tool Use, and coordinate tasks using Planning and Multi-Agent Collaboration. Each concept is accompanied by code examples.
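As a rough illustration of two of these patterns, the sketch below combines Routing and Prompt Chaining in plain Python. It deliberately does not use LangGraph's API (there, the steps would be graph nodes joined by edges and conditional edges), and every function here is a hypothetical stub standing in for an LLM call.

```python
from typing import Callable, Dict

State = Dict[str, str]

def classify(state: State) -> State:
    """Routing step: pick a branch based on the input (stub for an LLM classifier)."""
    route = "math" if any(ch.isdigit() for ch in state["input"]) else "chat"
    return {**state, "route": route}

def math_node(state: State) -> State:
    """Stub for a math-specialized prompt."""
    return {**state, "draft": f"[math] {state['input']}"}

def chat_node(state: State) -> State:
    """Stub for a general chat prompt."""
    return {**state, "draft": f"[chat] {state['input']}"}

def polish(state: State) -> State:
    """Chained step: consumes the previous step's draft."""
    return {**state, "output": state["draft"].strip() + " (polished)"}

HANDLERS: Dict[str, Callable[[State], State]] = {"math": math_node, "chat": chat_node}

def run(state: State) -> State:
    state = classify(state)                  # routing decision
    state = HANDLERS[state["route"]](state)  # conditional branch
    return polish(state)                     # sequential follow-up step

result = run({"input": "What is 2+2?"})
```

Passing a shared state dictionary from step to step mirrors how graph-based agent frameworks typically thread state through nodes.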

3. Small Language Models Are the Future of Agentic AI: Here’s Why by MKWriteshere

For production AI agents, using large language models for simple tasks is an inefficient approach. This article suggests adopting specialized Small Language Models (SLMs) with fewer than 10 billion parameters, which can deliver better performance on specific functions while significantly reducing operational costs. It details a hybrid architecture where a large model orchestrates complex requests, while various fine-tuned SLMs handle the specialized execution of sub-tasks. It also provides a practical migration roadmap, centered on a continuous improvement cycle of logging data, identifying task patterns, and deploying specialized models for greater efficiency.
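The log, identify, and deploy cycle described above can be sketched roughly as follows. This is a simplified assumption of how such a router might look, not the article's implementation: the `HybridRouter` class, its `min_calls` threshold, and the lambda "models" are all hypothetical placeholders for real model endpoints.

```python
from collections import Counter

class HybridRouter:
    """Sends each task to a specialized small model if one exists, else to the large model."""

    def __init__(self, large_model, min_calls=3):
        self.large_model = large_model
        self.specialists = {}   # task_type -> small model callable
        self.log = []           # every task type seen, for pattern mining
        self.min_calls = min_calls

    def handle(self, task_type, prompt):
        self.log.append(task_type)  # step 1: log usage
        model = self.specialists.get(task_type, self.large_model)
        return model(prompt)

    def candidates(self):
        """Step 2: frequent task types that still fall back to the large model."""
        counts = Counter(self.log)
        return sorted(
            t for t, c in counts.items()
            if c >= self.min_calls and t not in self.specialists
        )

    def deploy(self, task_type, small_model):
        """Step 3: register a fine-tuned small model for a recurring task type."""
        self.specialists[task_type] = small_model

router = HybridRouter(large_model=lambda p: "LLM:" + p, min_calls=2)
router.handle("extract", "invoice 1")
router.handle("extract", "invoice 2")
router.deploy("extract", lambda p: "SLM:" + p)
```

After `deploy`, later "extract" requests go to the small model while unseen task types still reach the large one.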

4. Building a Multi AI Agents application using the Amazon Gen AI Dream Team (Bedrock, Strands, AgentCore, and Q Developer) by Luis Parraguez

This article outlines the process of developing a multi-agent AI application that generates service proposals by leveraging a suite of AWS services. The author transitioned from an initial implementation using Amazon Bedrock Agents to a more sophisticated architecture, using Strands for workflow control and Amazon Bedrock AgentCore for production deployment. Key developments included introducing a validator agent to assess input quality, creating a review loop for refined outputs, and implementing thorough observability through logging and tracing.
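The validator and review-loop idea can be sketched in a few lines of plain Python. This is only an assumed shape for the control flow, with no AWS, Bedrock, or Strands calls: `validate`, `draft`, and `review` are hypothetical stubs where the real system would invoke agents, and the required fields are invented for the example.

```python
import logging

logger = logging.getLogger("proposal_flow")

REQUIRED_FIELDS = ("client", "scope")  # hypothetical input schema

def validate(request):
    """Validator step: return the names of any missing required fields."""
    return [f for f in REQUIRED_FIELDS if not request.get(f)]

def draft(request, feedback):
    """Writer step (stub): produce a proposal, addressing reviewer feedback."""
    text = f"Proposal for {request['client']}: {request['scope']}."
    if "add a timeline" in feedback:
        text += " Timeline: to be agreed."
    return text

def review(text):
    """Reviewer step (stub): return a list of requested changes, empty if approved."""
    return [] if "timeline" in text.lower() else ["add a timeline"]

def generate_proposal(request, max_rounds=3):
    missing = validate(request)
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    feedback, text, rounds = [], "", 0
    while rounds < max_rounds:
        rounds += 1
        text = draft(request, feedback)
        feedback = review(text)
        logger.info("round %d feedback: %s", rounds, feedback)  # basic observability
        if not feedback:
            break
    return text, rounds

proposal, rounds_used = generate_proposal({"client": "Acme", "scope": "data audit"})
```

Capping the loop with `max_rounds` keeps a disagreeing writer and reviewer from cycling indefinitely.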

If you want to publish with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.

AI will continue to come close to replicating human thought and behaviour but will never succeed, as clothes out of the dryer will never smell like clothes off the line.