LAI #87: Recurrent Memory, Agentic RAG, and Evaluating LLM Writing

And hands-on multimodal collabs: open-source diffusion training, GUI dataset workflows, and more!

Good morning, AI enthusiasts,

This week’s issue highlights how researchers and the community are stretching what’s possible across architectures, workflows, and open collaboration.

We look at:

Advanced recurrent architectures (LSTM, GRU, and bidirectional RNNs) and how they handle long-term dependencies

How agentic RAG moves beyond static retrieval by letting an agent decide when to retrieve, rewrite a query, or skip retrieval entirely

Writing benchmarks that use AI judges to score LLMs on creative, long-form, and emotionally complex writing

A full walkthrough on choosing the right LLM for content tasks, with examples

Whether you’re scaling production apps or running experiments on your own stack, this one’s packed with actionable ideas.

Let’s get into it.

What’s AI Weekly

This week, I’ve started sharing short-form videos that cover daily AI updates in under a minute. If you want a quick, no-fluff way to stay in the loop, you can now follow along on your platform of choice: Instagram, YouTube, or TikTok.

The latest one breaks down OpenAI’s unexpected move toward open-source with GPT-OSS.

Watch it here, and if it helps you stay sharper, hit subscribe so you never miss a key update.

— Louis-François Bouchard, Towards AI Co-founder & Head of Community

This issue is brought to you thanks to Snyk:

Join Snyk on August 28 at 11 am ET for Securing Vibe Coding: Addressing the Security Challenges of AI-Generated Code.

In this live session, host Sonya Moisset will cover:

• The security implications of Vibe Coding

• Actionable strategies to secure AI-generated code at scale, and

• How Snyk secures your AI-powered SDLC from code to deployment

Plus, ISC2 members will earn 1 CPE credit for attending live. Join Here!

Learn AI Together Community Section!

Featured Community post from the Discord

.lightm is working on DiffuLab, a library that aims to provide a simple, flexible way to train diffusion models while keeping every core component fully customizable. Future updates will add features such as adapters, LoRA fine-tuning, and feature injection into the architecture for specialized inference scenarios. Check it out and support a fellow community member! If you have feedback or want to contribute, reach out in the thread.

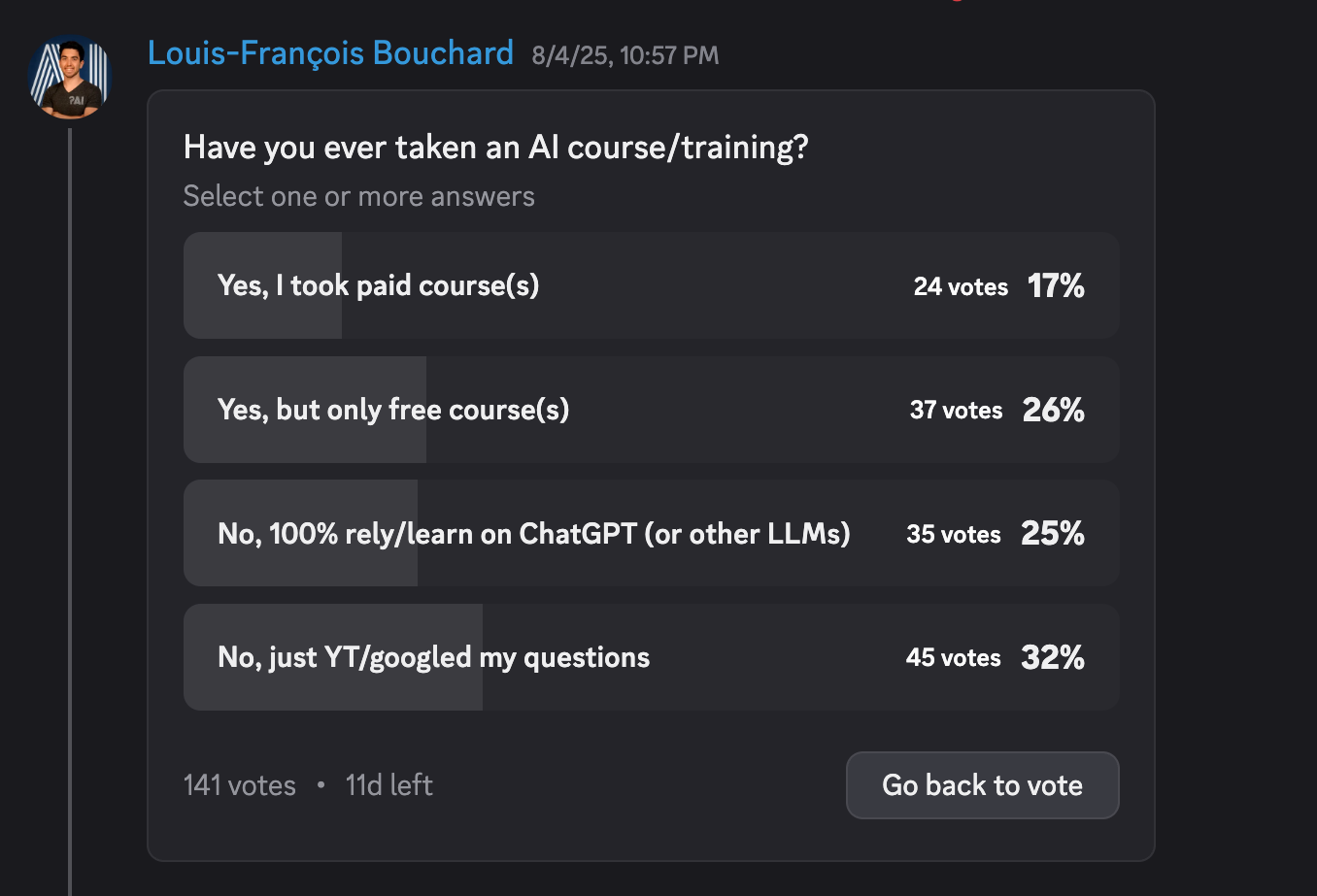

AI poll of the week!

It’s interesting to see that 57% of respondents are still learning AI primarily through self-directed exploration, YouTube, Google, or directly prompting an LLM, rather than any structured course.

If you’ve mostly relied on self-teaching (searching or prompting), what’s the biggest limitation you’ve run into? Is it a lack of depth, no feedback on your work, or something else entirely? Tell me in the thread!

Collaboration Opportunities

The Learn AI Together Discord community is flooded with collaboration opportunities. If you are excited to dive into applied AI, want a study partner, or even want to find a partner for your passion project, join the collaboration channel! Keep an eye on this section, too — we share cool opportunities every week!

1. Mahmoudsakr01 is looking for partners to learn data visualization and make dashboards. If this is your focus too, reach out to him in the thread!

2. Dawntasy, an independent ML/DL/AI researcher, has drafted a proposal for a new deep learning architecture for training LLMs alongside the Transformer. He is looking for an experienced deep learning engineer with advanced PyTorch or TensorFlow skills. If that sounds like you, connect with him in the thread!

3. Lame_0039 wants help brainstorming and training a model on GUI structure, color differences, shapes, and movement. The goal is a lightweight model that detects bounding boxes for GUI elements (such as buttons, icons, and text) and also captures motion and depth. If you want to collaborate on this project, contact them in the thread!

Meme of the week!

Meme shared by sysop1984_26148

TAI Curated section

Article of the week

Advanced Recurrent Neural Network Architectures for Sequential Data Modeling By Kuriko Iwai

This piece examines several advanced Recurrent Neural Network (RNN) architectures built to model long-term dependencies. It explains why standard RNNs struggle with problems such as vanishing gradients, then covers the mechanisms of Long Short-Term Memory (LSTM), Gated Recurrent Units (GRU), and Bidirectional RNNs, highlighting how each one manages information flow.
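To make the gating idea concrete, here is a minimal, dependency-free sketch of a GRU cell in Python. It is our own illustration, not code from the article. The weights are random and untrained, and the parameter names (`Wz`, `Uz`, `bz`, and so on) are assumptions. Papers and libraries also disagree on whether the update gate z weights the old state or the new candidate; this sketch lets z weight the candidate.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def matvec(m, v):
    # Multiply a matrix (list of rows) by a vector (list of floats)
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def vadd(*vecs):
    # Element-wise sum of equally sized vectors
    return [sum(vals) for vals in zip(*vecs)]

def init_params(input_size, hidden_size, seed=0):
    # Small random weights and zero biases for the update (z), reset (r) and candidate (h) paths
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    params = {}
    for g in ("z", "r", "h"):
        params["W" + g] = mat(hidden_size, input_size)   # input-to-hidden weights
        params["U" + g] = mat(hidden_size, hidden_size)  # hidden-to-hidden weights
        params["b" + g] = [0.0] * hidden_size
    return params

def gru_step(x, h, p):
    """One GRU update for input vector x and previous hidden state h."""
    z = [sigmoid(v) for v in vadd(matvec(p["Wz"], x), matvec(p["Uz"], h), p["bz"])]  # update gate
    r = [sigmoid(v) for v in vadd(matvec(p["Wr"], x), matvec(p["Ur"], h), p["br"])]  # reset gate
    gated_h = [ri * hi for ri, hi in zip(r, h)]  # reset gate hides parts of the old state
    cand = [math.tanh(v) for v in vadd(matvec(p["Wh"], x), matvec(p["Uh"], gated_h), p["bh"])]
    # Interpolate: z near 0 copies the old state forward unchanged, z near 1 adopts the candidate
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]

def run_gru(sequence, hidden_size, seed=0):
    # Unroll the cell over a sequence, starting from a zero state
    p = init_params(len(sequence[0]), hidden_size, seed)
    h = [0.0] * hidden_size
    for x in sequence:
        h = gru_step(x, h, p)
    return h

def run_bidirectional(sequence, hidden_size):
    # Separate weights read the sequence forward and backward; final states are concatenated
    forward = run_gru(sequence, hidden_size, seed=0)
    backward = run_gru(list(reversed(sequence)), hidden_size, seed=1)
    return forward + backward

seq = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(len(run_gru(seq, hidden_size=4)))            # 4
print(len(run_bidirectional(seq, hidden_size=4)))  # 8
```

The additive "copy when z is near 0" path is what lets gated cells carry information across many steps without the repeated squashing that causes vanishing gradients in a plain RNN. `run_bidirectional` is a simplification: a full bidirectional RNN concatenates the forward and backward states at every time step, while this sketch keeps only the final ones.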

Our must-read articles

1. I Trained My Agent To Learn From Each Other, And It Blew My Mind By Gao Dalie (Ilyass)

This article presents Agent KB to address the issue of isolated learning in AI. Agent KB is a shared knowledge base that allows different multi-agent systems to learn from a collective pool of experiences. The system uses a “Reason-Retrieve-Refine” pipeline where a Student Agent first retrieves high-level strategies for a new task. If errors occur, a Teacher Agent analyzes them and retrieves specific, step-level solutions from past experiences. This approach facilitates cross-domain knowledge transfer, enabling agents to adapt proven solutions and continuously improve performance by learning from a shared, evolving repository of information.
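The Reason-Retrieve-Refine loop can be sketched in a few lines of Python. This is our own toy illustration, not the Agent KB implementation. `SharedKnowledgeBase`, `Experience`, `student_plan`, and `teacher_refine` are hypothetical names. Word-overlap similarity stands in for the embedding search a real system would more likely use, and the example tasks are invented.

```python
from dataclasses import dataclass, field

def tokens(text):
    return set(text.lower().split())

def similarity(a, b):
    # Jaccard overlap of word sets: a crude stand-in for embedding similarity
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

@dataclass
class Experience:
    task: str                                       # what was attempted
    strategy: str                                   # high-level plan that worked
    step_fixes: dict = field(default_factory=dict)  # error type -> step-level fix

class SharedKnowledgeBase:
    """Hypothetical store that several agent systems read from and write to."""

    def __init__(self):
        self.experiences = []

    def add(self, exp):
        self.experiences.append(exp)

    def similar(self, task, k):
        # Most similar past experiences, ignoring ones with zero overlap
        ranked = sorted(self.experiences, key=lambda e: similarity(task, e.task), reverse=True)
        return [e for e in ranked if similarity(task, e.task) > 0][:k]

def student_plan(kb, task, k=2):
    # Reason + Retrieve: gather high-level strategies from related past tasks
    return [e.strategy for e in kb.similar(task, k)]

def teacher_refine(kb, task, error, k=3):
    # Refine: after a failure, look for a step-level fix for this specific error
    for e in kb.similar(task, k):
        if error in e.step_fixes:
            return e.step_fixes[error]
    return None

kb = SharedKnowledgeBase()
kb.add(Experience("parse csv sales report", "load file, validate columns, aggregate totals",
                  {"KeyError": "normalize header names before column lookup"}))
kb.add(Experience("scrape product prices from website", "fetch pages, parse html, store rows"))

task = "summarize csv expense report"
print(student_plan(kb, task))                # ['load file, validate columns, aggregate totals']
print(teacher_refine(kb, task, "KeyError"))  # normalize header names before column lookup
```

The split mirrors the two retrieval granularities described in the article. The student asks "what plan worked on similar tasks?", and the teacher asks the narrower question "how was this exact error fixed before?"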

2. Implementing Agentic RAG using LangGraph, Groq & FastAPI By A.Venkatesh

This piece shows how to build an agentic RAG system that makes its own decisions about each query. The author uses LangGraph to manage a workflow in which an agent first assesses the user's query, then dynamically decides whether to retrieve documents, rewrite the question for better results, or bypass retrieval for irrelevant topics. The system uses Groq for LLM inference, FAISS for vector search, and separate nodes for grading retrieved documents and generating answers. Everything is served through a FastAPI backend, resulting in a more efficient, context-aware chatbot.
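The decision flow can be sketched without any framework. This is a hedged, plain-Python stand-in, not the author's code. LangGraph, Groq, and FAISS are replaced by ordinary functions, and keyword overlap substitutes for both vector search and LLM-based grading. The corpus, `TOPIC_TERMS`, and the `SYNONYMS` rewrite table are hypothetical. Each function plays the role of one node in the graph.

```python
import re

STOPWORDS = {"a", "an", "the", "is", "are", "what", "how", "do", "i", "for", "of", "to", "in", "and", "with", "my"}

# Toy corpus and vocabulary (hypothetical): a real system would query a FAISS index instead
DOCS = [
    "FAISS performs fast similarity search over dense vectors.",
    "LangGraph models an agent workflow as a graph of nodes and edges.",
    "FastAPI serves Python functions as HTTP endpoints.",
]
TOPIC_TERMS = {"faiss", "langgraph", "fastapi", "vector", "vectors", "similarity", "search",
               "agent", "graph", "endpoint", "http", "nearest", "neighbor"}
SYNONYMS = {"nearest": "similarity", "neighbor": "search"}

def words(text):
    # Lowercased content words, minus a small stopword list
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def route(query):
    # Assess node: skip retrieval entirely for off-topic questions
    return "retrieve" if words(query) & TOPIC_TERMS else "direct"

def retrieve(query, docs, k=2):
    # Rank documents by word overlap with the query and keep the top k
    return sorted(docs, key=lambda d: len(words(query) & words(d)), reverse=True)[:k]

def grade(query, doc):
    # Grader node: a document counts as relevant if it shares any content word
    return bool(words(query) & words(doc))

def rewrite(query):
    # Rewrite node: map user phrasing onto corpus vocabulary (an LLM would do this in practice)
    return " ".join(SYNONYMS.get(w, w) for w in query.lower().split())

def agentic_rag(query, docs=DOCS, max_rewrites=1):
    """Return (path_taken, context_docs); a generator node would then answer from the context."""
    if route(query) == "direct":
        return "direct", []
    for attempt in range(max_rewrites + 1):
        relevant = [d for d in retrieve(query, docs) if grade(query, d)]
        if relevant:
            return ("retrieved" if attempt == 0 else "rewritten"), relevant
        if attempt < max_rewrites:
            query = rewrite(query)
    return "fallback", []

print(agentic_rag("What is the capital of France?"))  # ('direct', [])
print(agentic_rag("nearest neighbor lookup")[0])      # rewritten
```

Capping the rewrite loop with `max_rewrites` matters in any version of this design. Without a limit, a query the corpus can never answer would cycle between the retrieve, grade, and rewrite nodes indefinitely.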

3. How to Choose the Best LLM for Writing By Fabio Chiusano

An analysis of LLM writing performance suggests that a model's emotional intelligence is a strong predictor of its overall writing quality. The author traces how evaluation evolved from static tests to AI-judged benchmarks like EQ-Bench, which assess creative, long-form, and emotionally complex writing. The article includes leaderboards of top-performing models for specific tasks, such as Gemini 2.5 Pro for long-form content. While these benchmarks are a valuable starting point, the author recommends that writers test the top candidates on their own projects to find the most effective tool for their needs.
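AI-judged benchmarks still need a way to turn many individual judge verdicts into a single ranking. One common technique is Elo rating over pairwise comparisons, sketched below in Python. We are not claiming this is how EQ-Bench computes its leaderboards. The model names and verdicts are invented, and `k=32` and `start=1000` are conventional defaults, not values from the article.

```python
from collections import defaultdict

def elo_leaderboard(matches, k=32.0, start=1000.0):
    """Turn judge verdicts into ratings.

    matches: iterable of (model_a, model_b, score_a), where score_a is 1.0 if the
    judge preferred A, 0.5 for a tie, and 0.0 if it preferred B.
    """
    ratings = defaultdict(lambda: start)
    for a, b, score_a in matches:
        # Expected score of A given the current rating gap (standard logistic Elo curve)
        expected_a = 1.0 / (1.0 + 10 ** ((ratings[b] - ratings[a]) / 400.0))
        delta = k * (score_a - expected_a)
        ratings[a] += delta  # A gains what B loses, so the total rating is conserved
        ratings[b] -= delta
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)

verdicts = [
    ("model-x", "model-y", 1.0),
    ("model-x", "model-z", 1.0),
    ("model-y", "model-z", 0.5),
]
for name, rating in elo_leaderboard(verdicts):
    print(f"{name}: {rating:.1f}")
```

Sequential Elo updates depend on match order, so some leaderboards fit a Bradley-Terry model over all comparisons instead. Treat this sketch as the core idea only.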

4. Context Engineering in Action: Four System Implementations Transforming AI By MKWriteshere

This analysis details four system implementations that are transforming how AI models manage context. Retrieval-Augmented Generation (RAG) provides access to current information, while Memory Systems enable persistent learning from past interactions. Tool-Integrated Reasoning lets models use external applications to complete tasks, and Multi-Agent Systems coordinate specialized agents to solve complex problems. Together, these interconnected architectures produce more adaptive AI systems that can understand, remember, and act on information more effectively.
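All four patterns ultimately compete for the same limited context window. To illustrate that shared constraint, here is a hedged sketch of a context assembler that combines tool output, memory, and retrieved text under a budget. It is not code from the article. `ContextItem`, the priority values, and the word-count cost are assumptions, and a real system would count tokens with the model's own tokenizer.

```python
from dataclasses import dataclass

@dataclass
class ContextItem:
    source: str    # e.g. "tool", "memory", "retrieval" (illustrative labels)
    text: str
    priority: int  # lower number = more important

def build_context(items, budget_words):
    """Greedily pack the most important items that still fit in a word budget."""
    chosen, used = [], 0
    for item in sorted(items, key=lambda it: it.priority):
        cost = len(item.text.split())  # word count as a rough proxy for tokens
        if used + cost <= budget_words:
            chosen.append(item)
            used += cost
    return "\n".join(f"[{it.source}] {it.text}" for it in chosen)

items = [
    ContextItem("retrieval", "Paris has many museums, parks, and historic cafes worth visiting", 2),
    ContextItem("tool", "weather lookup: 18C and cloudy in Paris", 0),
    ContextItem("memory", "user prefers metric units", 1),
]
print(build_context(items, budget_words=14))
```

In this example, the retrieved passage is dropped because it would push the total past the budget. Deciding what gets cut, and in what order, is the core trade-off context engineering has to manage.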

If you want to publish with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.
