#57 Are LLMs Really the Magical Fix for All Your Problems?

Does every company actually need one?

Good morning, AI enthusiasts! When we launched our ‘Beginner to Advanced LLM Developer Course,’ many of you asked whether you were late to the AI wagon. I think the LLM revolution is only beginning, and there’s no better time to get started. By learning how LLMs work and how to build with them now, you’re gaining a first-mover advantage in a field that will only grow. That said, some people question the business risk and defensibility of building on top of LLMs and dismiss such products as “wrapper” businesses. That criticism holds only if you don’t know where to use LLMs and where NOT to. This week, I’m diving into exactly that to help you understand where this technology can do wonders and where it might fail.

What’s AI Weekly

While I love LLMs, sometimes they’re simply overkill, burning far more compute and money than a much simpler solution would. This week, in What’s AI, I dive into where LLMs truly shine and, more importantly, where they fall short, along with the trade-offs you need to consider. This should give you a clear idea of whether LLMs are the right fit for your problem. Watch the full video on YouTube or read the article here.

— Louis-François Bouchard, Towards AI Co-founder & Head of Community

Learn AI Together Community section!

Featured Community post from the Discord

Lucasgelfond implemented Facebook Research’s model, Segment Anything 2, using WebGPU. It runs completely client-side, so no data is sent to a server. You can try a demo here or implement it yourself with the repository. If you have any questions or suggestions, share them in the thread!

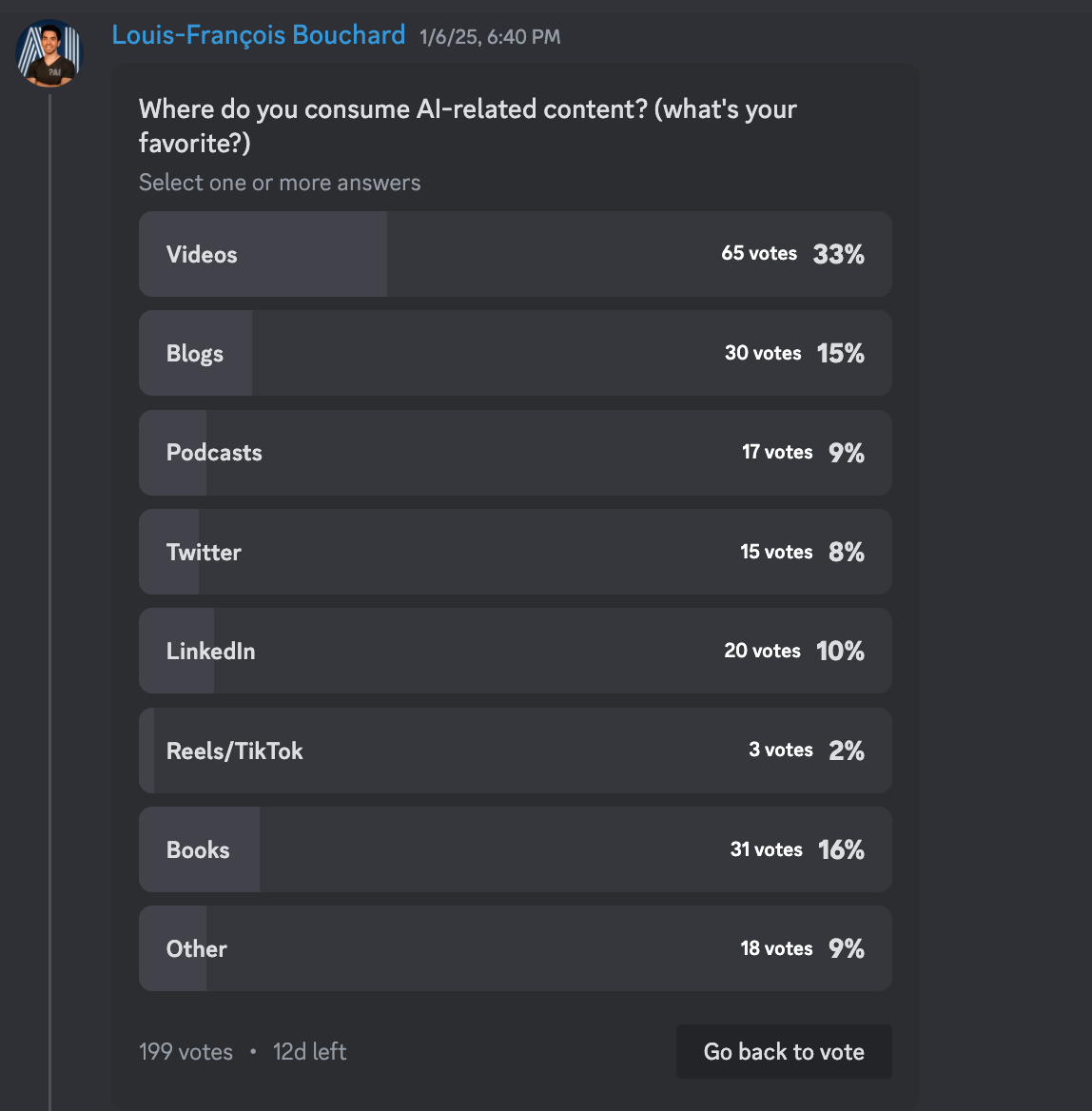

AI poll of the week!

We would love to know your favorites and help the community find more resources and learn together. Share a list in the thread!

Collaboration Opportunities

The Learn AI Together Discord community is overflowing with collaboration opportunities. If you are excited to dive into applied AI, want a study partner, or even want to find a partner for your passion project, join the collaboration channel! Keep an eye on this section, too: we share cool opportunities every week!

1. Harryyyy9049 is working on an architectural management platform. If you are comfortable working with deep learning models and architectures and want to know more, reach out to him in the thread!

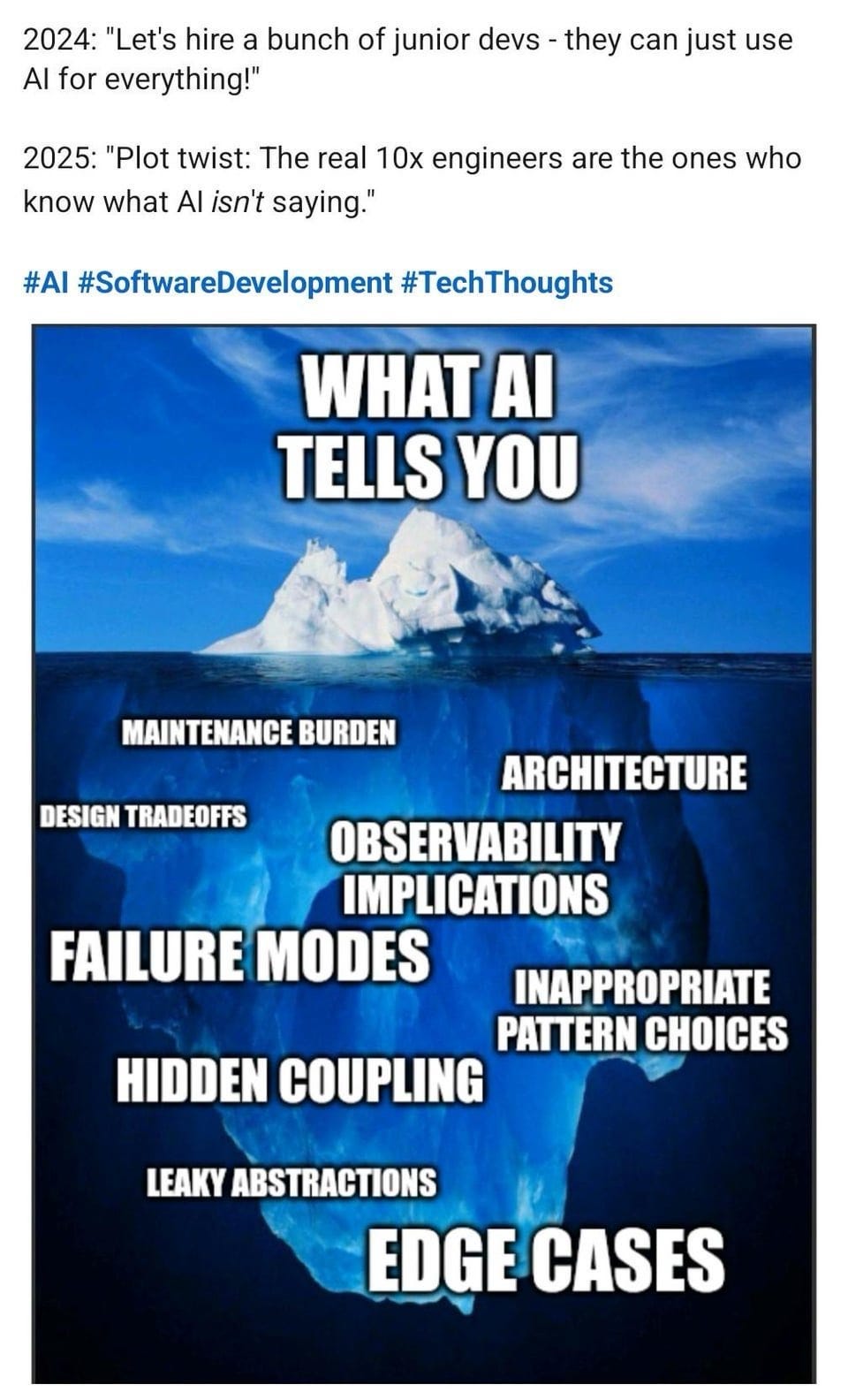

Meme of the week!

Meme shared by ghost_in_the_machine

TAI Curated section

Article of the week

Kolmogorov-Arnold Networks: Exploring Dynamic Weights and Attention Mechanisms By Shenggang Li

This article explores Kolmogorov-Arnold Networks (KAN), a neural network architecture based on the Kolmogorov-Arnold representation theorem. It details KAN’s construction and training, emphasizing its ability to decompose complex multivariate functions into simpler univariate ones. It then introduces dynamic weight adjustments, which enhance KAN’s adaptability to varying inputs, and compares this approach to a spline-based method via a coding example, demonstrating improved performance metrics (AUC, KS Statistic, Log Loss). Finally, it investigates the relationship between KAN and attention mechanisms, proposing an Attention-KAN model that integrates softmax normalization and dynamic interactions. Experiments comparing different normalization functions (Softmax, Softplus, Rectify) within the Attention-KAN architecture are presented, highlighting the Rectify function’s superior classification performance. It concludes by suggesting avenues for future research, including testing KAN on diverse datasets and optimizing the Attention-KAN architecture.
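To make the normalization comparison a little more concrete, here is a minimal sketch of three ways to turn raw attention-style scores into weights that sum to 1. It is not the author’s code: the function names, the division-by-sum form used for Softplus and Rectify, and the uniform fallback are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Standard softmax: exponentiate, then divide by the sum."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softplus_normalize(scores):
    """Assumed form: softplus(x) = log(1 + exp(x)), divided by the sum."""
    # max(s, 0) + log1p(exp(-|s|)) is a numerically stable softplus
    sp = [max(s, 0.0) + math.log1p(math.exp(-abs(s))) for s in scores]
    total = sum(sp)
    return [v / total for v in sp]


def rectify_normalize(scores, eps=1e-12):
    """Assumed form: ReLU each score, then divide by the sum."""
    relu = [max(s, 0.0) for s in scores]
    total = sum(relu)
    if total < eps:
        # Hypothetical fallback: uniform weights when every score is <= 0
        return [1.0 / len(scores)] * len(scores)
    return [v / total for v in relu]


if __name__ == "__main__":
    raw = [-1.0, 2.0, 2.0]
    for fn in (softmax, softplus_normalize, rectify_normalize):
        print(fn.__name__, [round(w, 3) for w in fn(raw)])
```

One difference worth noticing: the rectified version assigns exactly zero weight to negative scores, so it produces sparse weights, while Softmax and Softplus always keep every weight strictly positive.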

Our must-read articles

1. KAG Graph + Multimodal RAG + LLM Agents = Powerful AI Reasoning By Gao Dalie (高達烈)

This article explores the Knowledge Augmented Generation (KAG) framework, an open-source system that enhances Retrieval-Augmented Generation (RAG) for improved question answering. KAG addresses RAG limitations by integrating knowledge graphs, enabling more accurate and relevant responses, particularly for complex, multi-hop queries. It uses a hybrid reasoning engine that combines large language model reasoning, knowledge graph reasoning, and mathematical logic, and it significantly outperforms other RAG methods in benchmark tests. The article details KAG’s architecture, including its knowledge representation, mutual indexing, and logical-form-guided reasoning. A step-by-step guide demonstrates building a KAG-powered chatbot using Docker, Neo4j, and an LLM, showcasing its ability to process diverse data types (PDFs, charts, images) and answer complex questions accurately. The author contrasts KAG with GraphRAG, highlighting KAG’s superior performance in professional domains due to its enhanced semantic reasoning and tailored natural language processing capabilities. It concludes by noting KAG’s ongoing development and potential for further improvement and customization.

2. Combating Misinformation with Responsible AI: A Fact-Checking Multi-agent Tool Powered by LangChain and Groq By Vikram Bhat

This article details a multi-agent fact-checking tool built using LangChain and Groq. The tool uses a multi-agent architecture, with specialized agents for evidence gathering (via Google Search and Wikipedia APIs), summarization (using a ChatGroq model), fact-checking, and sentiment analysis (using TextBlob). The entire process is integrated into a Streamlit interface, allowing users to input claims and receive a comprehensive analysis, including evidence, summaries, verdicts, and sentiment scores. While acknowledging limitations like reliance on data quality and potential misinterpretations of nuanced language, the author highlights the tool’s potential in combating online misinformation.

3. Building Multimodal RAG Application #6: Large Vision Language Models (LVLMs) Inference By Youssef Hosni

This article, part six of a series on multimodal RAG applications, focuses on inference with Large Vision Language Models (LVLMs) within a RAG framework. It details setting up the environment using Python libraries like pathlib, urllib, PIL, and IPython. Data preparation involves downloading images and using metadata from Flickr and previous articles. It then explores several LVLM use cases, including image captioning, visual question answering, and querying images based on embedded text or associated transcripts, using the LLaVA model via Prediction Guard. Each use case provides example code and demonstrates LVLMs’ ability to process and understand visual and textual information in various contexts, culminating in a multi-turn question-answering example.

If you want to publish with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.