Good morning, AI enthusiasts! An interesting ongoing discussion in the community is about bias in AI. We are very close to releasing the most comprehensive practical LLM Python developer course out there, From Beginner to Advanced LLM Developer: 85+ lessons that progress from dataset collection and curation to deploying a working advanced LLM pipeline, one that addresses precisely these LLM issues, such as bias and hallucinations. More on this next week! Meanwhile, let’s start this week by addressing this bias “issue.” We have also compiled the best of deep learning research, deep learning vision models, time series forecasting, and more!

What’s AI Weekly

This week in High Learning Rate, my other newsletter, I explore the world of bias in AI — one of the many challenges that can make or break an AI system. In AI, biases are not inherently good or bad; they simply represent a tendency that impacts model decision-making. Whether that impact is positive or negative depends entirely on how they’re managed. Read the complete article to understand the term better and learn to create accurate, ethical, and effective systems.

— Louis-François Bouchard, Towards AI Co-founder & Head of Community

Learn AI Together Community section!

AI poll of the week!

We completely agree that bias is inevitable, but we also believe its impact, positive or negative, depends entirely on how it’s managed. Which bias do you consider necessary? Share it in the thread!

Collaboration Opportunities

The Learn AI Together Discord community is overflowing with collaboration opportunities. If you are excited to dive into applied AI, want a study partner, or even want to find a partner for your passion project, join the collaboration channel! Keep an eye on this section, too: we share cool opportunities every week!

1. Akeshav is looking for developers and data experts to build a website like IndiaStats. The website will focus on aggregating and visualizing district/state-level data in a clean, user-friendly interface. If this sounds fun to you, contact them in the thread!

2. Ansh7974 has written a paper on multimodal large language models and needs someone to review it and make the necessary changes for publication. If you can help with this, reach out in the thread!

3. Swangaaw4 is looking for a learning partner to study AI technologies. If you want to study together, connect in the thread!

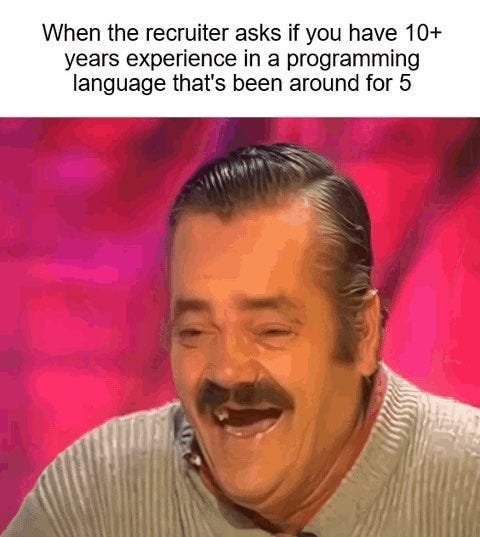

Meme of the week!

Meme shared by ghost_in_the_machine

TAI Curated section

Article of the week

Time Series Forecasting with Genetic Algorithms: A Novel Approach by Shenggang Li

This article explores the application of genetic algorithms (GAs) for time series forecasting, presenting two methods: optimizing predicted values directly and optimizing model parameters. It includes a straightforward example of direct prediction and introduces an enhanced GA approach that features recency weighting, seasonality encoding, and autoregressive elements. Demonstrated with code, this improved method is compared to traditional forecasting techniques using an energy consumption dataset, showing superior performance for short and medium-term predictions. The potential for further advancements in GA applications through hybrid techniques, feature engineering, and multi-objective optimization is also discussed.
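To make the second method (optimizing model parameters) concrete, here is a minimal sketch of a genetic algorithm that fits a two-parameter autoregressive forecast with a recency-weighted error. It is not the author's code: the function names, the AR(1) model, the decay factor, and the selection and mutation settings are all illustrative assumptions, and it leaves out the seasonality encoding the article describes.

```python
import random


def recency_weighted_error(params, series, decay=0.9):
    """Weighted mean squared error of one-step AR(1) forecasts.

    Newer observations get larger weights (the newest gets weight 1).
    Assumes the series has at least two points.
    """
    intercept, slope = params
    n = len(series)
    total, weight_sum = 0.0, 0.0
    for t in range(1, n):
        weight = decay ** (n - 1 - t)
        prediction = intercept + slope * series[t - 1]
        total += weight * (series[t] - prediction) ** 2
        weight_sum += weight
    return total / weight_sum


def evolve_ar1(series, population_size=40, generations=60,
               mutation_scale=0.1, seed=0):
    """Search for (intercept, slope) with a simple elitist genetic algorithm.

    Assumes population_size is at least 8 so two distinct parents exist.
    """
    rng = random.Random(seed)
    population = [(rng.uniform(-1, 1), rng.uniform(-1, 1))
                  for _ in range(population_size)]
    for _ in range(generations):
        # Selection: keep the best quarter unchanged (elitism).
        population.sort(key=lambda p: recency_weighted_error(p, series))
        parents = population[: population_size // 4]
        children = []
        while len(parents) + len(children) < population_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover followed by Gaussian mutation.
            child = tuple(rng.choice(pair) + rng.gauss(0, mutation_scale)
                          for pair in zip(a, b))
            children.append(child)
        population = parents + children
    return min(population, key=lambda p: recency_weighted_error(p, series))


if __name__ == "__main__":
    demo_series = [10, 12, 11, 13, 12, 14, 13, 15, 14, 16]  # made-up data
    intercept, slope = evolve_ar1(demo_series)
    print("next value forecast:", intercept + slope * demo_series[-1])
```

Because the best candidates are carried over unchanged each generation, the best error can never get worse as the search runs; how close it gets to the optimum depends on the assumed settings above.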

Our must-read articles

1. Vision Embedding Comparison for Image Similarity Search: EfficientNet vs. ViT vs. DINO vs. CLIP vs. BLIP2 by Yuki Shizuya

This article compares the performance of five deep learning models (EfficientNet, ViT, DINO-v2, CLIP, and BLIP-2) in image similarity search tasks. It explores how the embeddings these models generate differ depending on their architectures and training methods. The models are evaluated using the Flickr30k dataset and the Faiss library for approximate nearest neighbor search. DINO-v2 and BLIP-2 demonstrate superior performance in capturing image semantics, with DINO-v2 focusing on objects and BLIP-2 excelling at understanding context beyond pixel information.
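For readers new to embedding-based search, the core idea can be sketched in a few lines: every image becomes a vector, and similar images are the ones whose vectors point in nearly the same direction. The sketch below uses exact brute-force cosine similarity on made-up three-dimensional vectors. The function names and the toy gallery are assumptions for illustration; the article itself uses real model embeddings and Faiss, which speeds up this lookup on large collections.

```python
import math


def normalize(vector):
    """Scale a vector to unit length (assumes it is not all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def top_k_similar(query, gallery, k=3):
    """Return the k gallery items most similar to the query by cosine similarity.

    gallery maps an image name to its embedding vector.
    """
    q = normalize(query)
    scored = []
    for name, embedding in gallery.items():
        e = normalize(embedding)
        similarity = sum(a * b for a, b in zip(q, e))
        scored.append((similarity, name))
    scored.sort(reverse=True)  # highest similarity first
    return [(name, similarity) for similarity, name in scored[:k]]


if __name__ == "__main__":
    # Toy embeddings; real ones would come from a vision model.
    gallery = {
        "dog_a": [1.0, 0.0, 0.0],
        "dog_b": [0.9, 0.1, 0.0],
        "car": [0.0, 0.0, 1.0],
    }
    for name, similarity in top_k_similar([1.0, 0.05, 0.0], gallery, k=2):
        print(name, round(similarity, 4))
```

Which model produces the vectors is what changes the results: the search step stays the same, so the comparison in the article is really a comparison of what each embedding captures.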

2. Instruction Fine-Tuning LLM using SFT for Financial Sentiment: A Step-by-Step Guide by Youssef Hosni

This article provides a step-by-step guide to fine-tuning a large language model (LLM) for financial sentiment analysis. It uses the facebook/opt-1.3b model and the FinGPT sentiment dataset from Deep Lake for training. It covers setting up the environment, loading the dataset, initializing the model and trainer, and fine-tuning with Supervised Fine-Tuning (SFT) and LoRA (Low-Rank Adaptation). It then demonstrates how to run inference and apply the fine-tuned model to real-world financial data.
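As a rough illustration of why LoRA makes this kind of fine-tuning cheap, the sketch below shows the arithmetic behind it: the original weight matrix W stays frozen, and only two small matrices B and A are trained, with the effective weight being W + (alpha / r) * B A. This is plain Python for clarity, not the article's training code; the function names and the 2048 x 2048 layer size are illustrative assumptions.

```python
def lora_parameter_counts(d_out, d_in, rank):
    """Trainable parameters for full fine-tuning vs. a LoRA adapter on one layer."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


def lora_effective_weight(W, A, B, alpha):
    """Compute W + (alpha / r) * (B @ A) with nested lists.

    W is d_out x d_in (frozen), B is d_out x r, A is r x d_in (both trained).
    """
    rank = len(A)
    scale = alpha / rank
    return [
        [
            W[i][j] + scale * sum(B[i][k] * A[k][j] for k in range(rank))
            for j in range(len(W[0]))
        ]
        for i in range(len(W))
    ]


if __name__ == "__main__":
    full, lora = lora_parameter_counts(2048, 2048, rank=8)
    print(f"full: {full:,} trainable values, LoRA: {lora:,}")

    W = [[1, 0], [0, 1]]
    B = [[0], [0]]  # B starts at zero, so training begins from the original W
    A = [[3, 4]]
    print(lora_effective_weight(W, A, B, alpha=2))
```

With rank 8 on the assumed layer size, the adapter trains well under 1% of the values a full update would touch, which is the main reason LoRA fits on modest hardware.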

3. Deep Learning’s Greatest Hits (Vol. 2) by Zoheb Abai

This article explores the key research papers that have shaped the development of deep learning, focusing on advancements in natural language processing (NLP), computer vision, and generative and multimodal learning. It starts by tracing the evolution of NLP from basic statistical models to the emergence of transformer-based architectures and large language models like BERT and GPT-3. The author also dives into the advancements in computer vision, highlighting the impact of convolutional neural networks (CNNs) like AlexNet, VGG, and ResNet, along with the rise of object detection and segmentation models like R-CNN, Faster R-CNN, Mask R-CNN, YOLO, and U-Net.

If you want to publish with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.